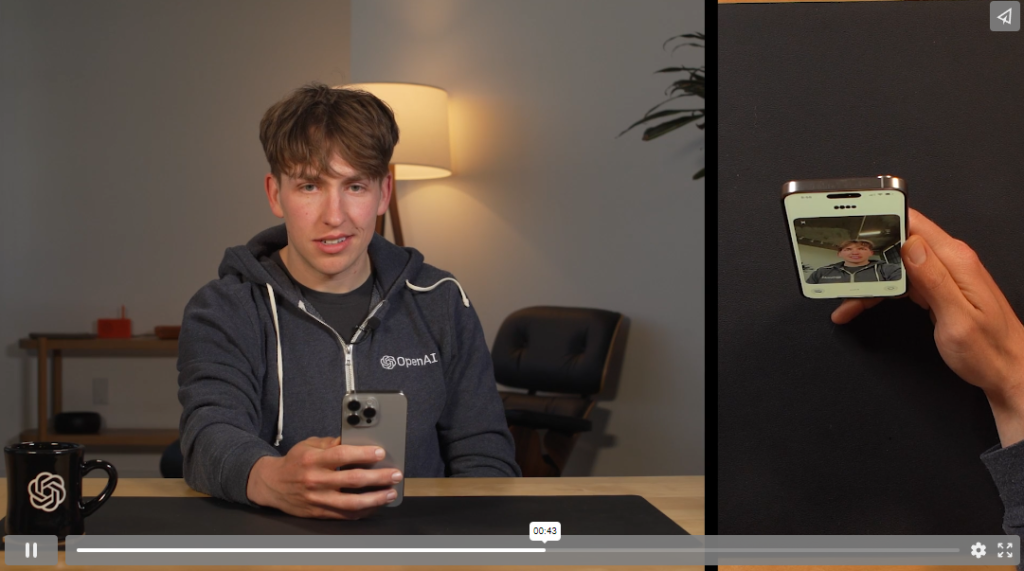

OpenAI has unveiled its new AI model, GPT-4o. The new model is twice as fast as its predecessor and costs half as much to run.

According to foreign media outlets including 9TO5MAC, OpenAI CTO Mira Murati unveiled GPT-4o at a live event on the 13th (local time). The "o" stands for "omni," meaning "all."

When a user speaks to it, GPT-4o can hold a conversation at roughly the pace of a human. It appears to have overcome some of the response delay that had been criticized as a weakness.

At the live event, when CTO Mira Murati asked GPT-4o to "tell me a story to help me fall asleep when I can't sleep well," the model told the story in a variety of voices, emotions and tones.

GPT-4o supports 50 languages and can process text, images and voice. In addition to translating in real time, it can read people's facial expressions and interpret graphs through a smartphone camera.

GPT-4o can respond to voice input in as little as 232 milliseconds (ms), with an average of 320 ms, which is similar to human response time in conversation. OpenAI explained that GPT-4o can read human emotions and make jokes.

GPT-4o will be available for free, although paid subscribers can ask five times as many questions as free users. The new AI voice mode is scheduled for release within a few weeks.

CTO Mira Murati said, "So far, we have focused on increasing the intelligence of AI models," adding, "GPT-4o is a big step forward in ease of use."

Given that AI has already caught up with the speed of human response, OpenAI's models may take on a larger role than humans in the future. However, AI should not be allowed to play a greater role than humans.

JENNIFER KIM

US ASIA JOURNAL